Clip-as-service

제품 정보

오픈소스 사용 사례

공개 채팅

지원 계획

현재 사용할 수 있는 OSS 플랜이 없습니다.

저장소의 제공자 또는 기여자인 경우 OSS 플랜 추가를 시작할 수 있습니다.

OSS 플랜 추가이 오픈소스에 대한 플랜을 찾고 있다면 저희에게 문의해 주세요.

전문 공급자와 연락하실 수 있도록 도와드리겠습니다.

제품 세부 정보

CLIP-as-service is a low-latency high-scalability service for embedding images and text. It can be easily integrated as a microservice into neural search solutions.

⚡ Fast: Serve CLIP models with TensorRT, ONNX runtime and PyTorch w/o JIT with 800QPS[*]. Non-blocking duplex streaming on requests and responses, designed for large data and long-running tasks.

🫐 Elastic: Horizontally scale up and down multiple CLIP models on single GPU, with automatic load balancing.

🐥 Easy-to-use: No learning curve, minimalist design on client and server. Intuitive and consistent API for image and sentence embedding.

👒 Modern: Async client support. Easily switch between gRPC, HTTP, WebSocket protocols with TLS and compression.

🍱 Integration: Smooth integration with neural search ecosystem including Jina and DocArray. Build cross-modal and multi-modal solutions in no time.

[*] with default config (single replica, PyTorch no JIT) on GeForce RTX 3090.

Text & image embedding

| via HTTPS 🔐 | via gRPC 🔐⚡⚡ |

|

```bash

curl \

-X POST https:// |

```python

# pip install clip-client

from clip_client import Client

c = Client(

'grpcs:// |

Visual reasoning

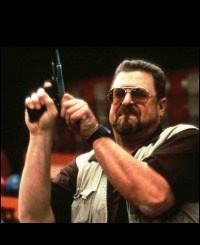

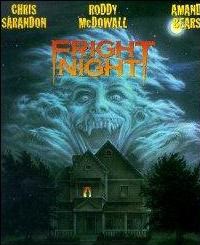

There are four basic visual reasoning skills: object recognition, object counting, color recognition, and spatial relation understanding. Let's try some:

You need to install

jq(a JSON processor) to prettify the results.

| Image | via HTTPS 🔐 |

|

|

```bash

curl \

-X POST https:// |

|

|

```bash

curl \

-X POST https:// |

|

|

```bash

curl \

-X POST https:// |

Documentation

Install

CLIP-as-service consists of two Python packages clip-server and clip-client that can be installed independently. Both require Python 3.7+.

Install server

| Pytorch Runtime ⚡ | ONNX Runtime ⚡⚡ | TensorRT Runtime ⚡⚡⚡ |

| ```bash pip install clip-server ``` | ```bash pip install "clip-server[onnx]" ``` | ```bash pip install nvidia-pyindex pip install "clip-server[tensorrt]" ``` |

You can also host the server on Google Colab, leveraging its free GPU/TPU.

Install client

pip install clip-clientQuick check

You can run a simple connectivity check after install.

| C/S | Command | Expect output |

|---|---|---|

| Server | ```bash python -m clip_server ``` |

|

| Client | ```python from clip_client import Client c = Client('grpc://0.0.0.0:23456') c.profile() ``` |

|

You can change 0.0.0.0 to the intranet or public IP address to test the connectivity over private and public network.

Get Started

Basic usage

- Start the server:

python -m clip_server. Remember its address and port. -

Create a client:

from clip_client import Client c = Client('grpc://0.0.0.0:51000') -

To get sentence embedding:

r = c.encode(['First do it', 'then do it right', 'then do it better']) print(r.shape) # [3, 512] -

To get image embedding:

r = c.encode(['apple.png', # local image 'https://clip-as-service.jina.ai/_static/favicon.png', # remote image 'data:image/gif;base64,R0lGODlhEAAQAMQAAORHHOVSKudfOulrSOp3WOyDZu6QdvCchPGolfO0o/XBs/fNwfjZ0frl3/zy7////wAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAACH5BAkAABAALAAAAAAQABAAAAVVICSOZGlCQAosJ6mu7fiyZeKqNKToQGDsM8hBADgUXoGAiqhSvp5QAnQKGIgUhwFUYLCVDFCrKUE1lBavAViFIDlTImbKC5Gm2hB0SlBCBMQiB0UjIQA7']) # in image URI print(r.shape) # [3, 512]

More comprehensive server and client user guides can be found in the docs.

Text-to-image cross-modal search in 10 lines

Let's build a text-to-image search using CLIP-as-service. Namely, a user can input a sentence and the program returns matching images. We'll use the Totally Looks Like dataset and DocArray package. Note that DocArray is included within clip-client as an upstream dependency, so you don't need to install it separately.

Load images

First we load images. You can simply pull them from Jina Cloud:

from docarray import DocumentArray

da = DocumentArray.pull('ttl-original', show_progress=True, local_cache=True)or download TTL dataset, unzip, load manually

Alternatively, you can go to [Totally Looks Like](https://sites.google.com/view/totally-looks-like-dataset) official website, unzip and load images: ```python from docarray import DocumentArray da = DocumentArray.from_files(['left/*.jpg', 'right/*.jpg']) ```The dataset contains 12,032 images, so it may take a while to pull. Once done, you can visualize it and get the first taste of those images:

da.plot_image_sprites()

![]()

Encode images

Start the server with python -m clip_server. Let's say it's at 0.0.0.0:51000 with GRPC protocol (you will get this information after running the server).

Create a Python client script:

from clip_client import Client

c = Client(server='grpc://0.0.0.0:51000')

da = c.encode(da, show_progress=True)Depending on your GPU and client-server network, it may take a while to embed 12K images. In my case, it took about two minutes.

Download the pre-encoded dataset

If you're impatient or don't have a GPU, waiting can be Hell. In this case, you can simply pull our pre-encoded image dataset: ```python from docarray import DocumentArray da = DocumentArray.pull('ttl-embedding', show_progress=True, local_cache=True) ```Search via sentence

Let's build a simple prompt to allow a user to type sentence:

while True:

vec = c.encode([input('sentence> ')])

r = da.find(query=vec, limit=9)

r[0].plot_image_sprites()Showcase

Now you can input arbitrary English sentences and view the top-9 matching images. Search is fast and instinctive. Let's have some fun:

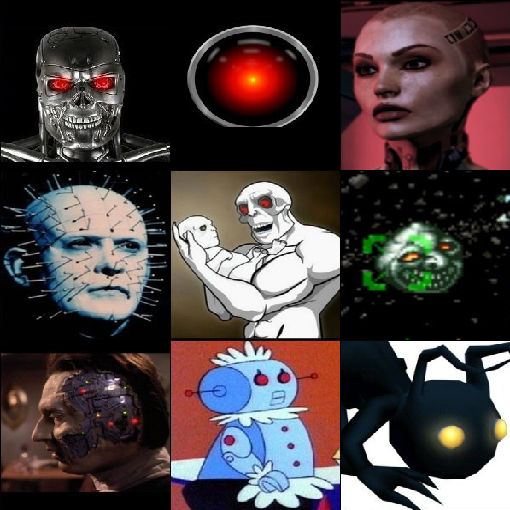

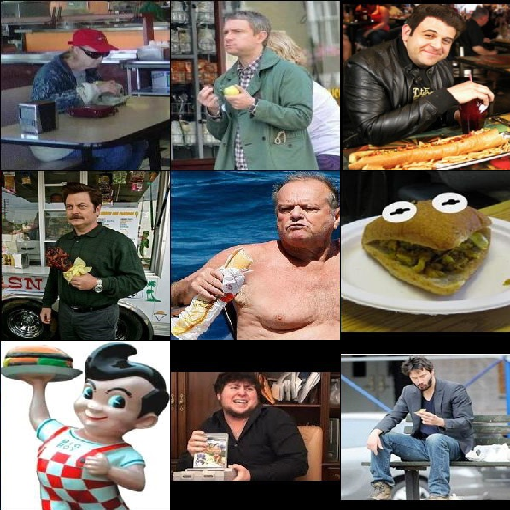

| "a happy potato" | "a super evil AI" | "a guy enjoying his burger" |

|---|---|---|

|

|

|

|

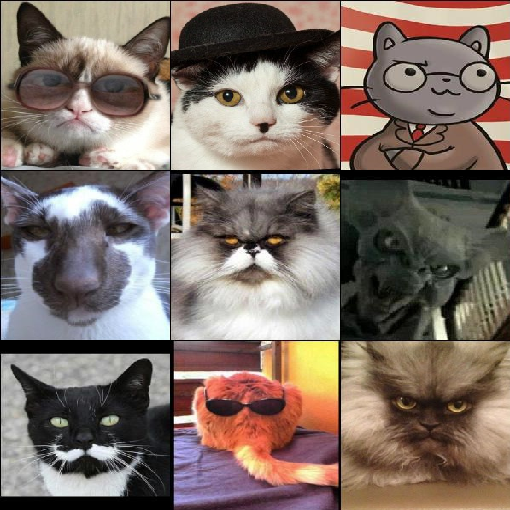

| "professor cat is very serious" | "an ego engineer lives with parent" | "there will be no tomorrow so lets eat unhealthy" |

|---|---|---|

|

|

|

|

Let's save the embedding result for our next example:

da.save_binary('ttl-image')Image-to-text cross-modal search in 10 Lines

We can also switch the input and output of the last program to achieve image-to-text search. Precisely, given a query image find the sentence that best describes the image.

Let's use all sentences from the book "Pride and Prejudice".

from docarray import Document, DocumentArray

d = Document(uri='https://www.gutenberg.org/files/1342/1342-0.txt').load_uri_to_text()

da = DocumentArray(

Document(text=s.strip()) for s in d.text.replace('\r\n', '').split('.') if s.strip()

)Let's look at what we got:

da.summary() Documents Summary

Length 6403

Homogenous Documents True

Common Attributes ('id', 'text')

Attributes Summary

Attribute Data type #Unique values Has empty value

──────────────────────────────────────────────────────────

id ('str',) 6403 False

text ('str',) 6030 False Encode sentences

Now encode these 6,403 sentences, it may take 10 seconds or less depending on your GPU and network:

from clip_client import Client

c = Client('grpc://0.0.0.0:51000')

r = c.encode(da, show_progress=True)Download the pre-encoded dataset

Again, for people who are impatient or don't have a GPU, we have prepared a pre-encoded text dataset: ```python from docarray import DocumentArray da = DocumentArray.pull('ttl-textual', show_progress=True, local_cache=True) ```Search via image

Let's load our previously stored image embedding, randomly sample 10 image Documents, then find top-1 nearest neighbour of each.

from docarray import DocumentArray

img_da = DocumentArray.load_binary('ttl-image')

for d in img_da.sample(10):

print(da.find(d.embedding, limit=1)[0].text)Showcase

Fun time! Note, unlike the previous example, here the input is an image and the sentence is the output. All sentences come from the book "Pride and Prejudice".

|

|

|

|

|

|

| Besides, there was truth in his looks | Gardiner smiled | what’s his name | By tea time, however, the dose had been enough, and Mr | You do not look well |

|

|

|

|

|

|

| “A gamester!” she cried | If you mention my name at the Bell, you will be attended to | Never mind Miss Lizzy’s hair | Elizabeth will soon be the wife of Mr | I saw them the night before last |

Rank image-text matches via CLIP model

From 0.3.0 CLIP-as-service adds a new /rank endpoint that re-ranks cross-modal matches according to their joint likelihood in CLIP model. For example, given an image Document with some predefined sentence matches as below:

from clip_client import Client

from docarray import Document

c = Client(server='grpc://0.0.0.0:51000')

r = c.rank(

[

Document(

uri='.github/README-img/rerank.png',

matches=[

Document(text=f'a photo of a {p}')

for p in (

'control room',

'lecture room',

'conference room',

'podium indoor',

'television studio',

)

],

)

]

)

print(r['@m', ['text', 'scores__clip_score__value']])[['a photo of a television studio', 'a photo of a conference room', 'a photo of a lecture room', 'a photo of a control room', 'a photo of a podium indoor'],

[0.9920725226402283, 0.006038925610482693, 0.0009973491542041302, 0.00078492151806131, 0.00010626466246321797]]One can see now a photo of a television studio is ranked to the top with clip_score score at 0.992. In practice, one can use this endpoint to re-rank the matching result from another search system, for improving the cross-modal search quality.

|

|

Rank text-image matches via CLIP model

In the DALL·E Flow project, CLIP is called for ranking the generated results from DALL·E. It has an Executor wrapped on top of clip-client, which calls .arank() - the async version of .rank():

from clip_client import Client

from jina import Executor, requests, DocumentArray

class ReRank(Executor):

def __init__(self, clip_server: str, **kwargs):

super().__init__(**kwargs)

self._client = Client(server=clip_server)

@requests(on='/')

async def rerank(self, docs: DocumentArray, **kwargs):

return await self._client.arank(docs)

Intrigued? That's only scratching the surface of what CLIP-as-service is capable of. Read our docs to learn more.

Support

- Join our Discord community and chat with other community members about ideas.

- Watch our Engineering All Hands to learn Jina's new features and stay up-to-date with the latest AI techniques.

- Subscribe to the latest video tutorials on our YouTube channel

Join Us

CLIP-as-service is backed by Jina AI and licensed under Apache-2.0. We are actively hiring AI engineers, solution engineers to build the next neural search ecosystem in open-source.

-brightgreen?style=flat-square&logo=googlecolab&&logoColor=white)